Gemini Is Cooking Bananas Under Antigravity

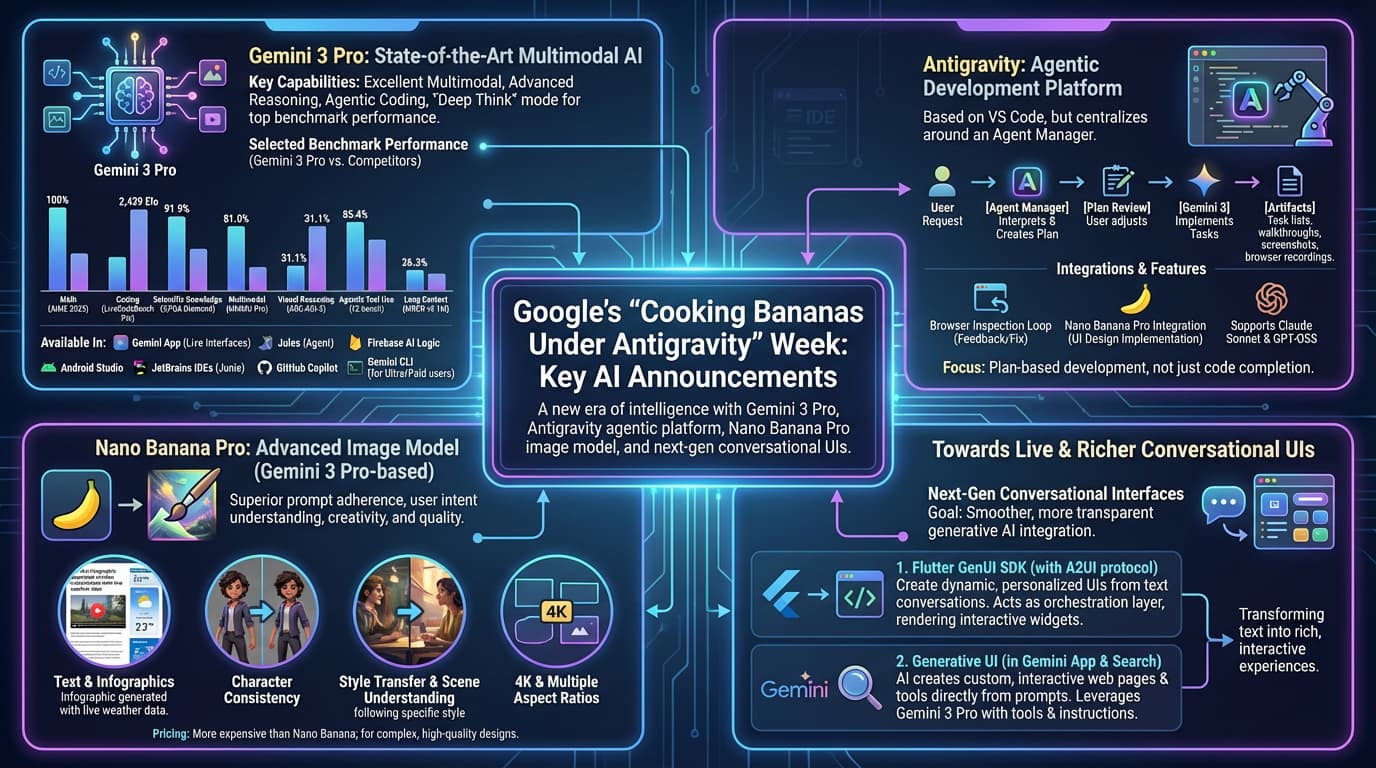

What a wild title, isn’t it? It’s a catchy one, not generated by AI, to illustrate this crazy week of announcements by Google. Of course, there are big highlights like Gemini 3 Pro, Antigravity, and Nano Banana Pro, but there’s more. The purpose of this article is to share everything with you, including links to all the interesting material about this news.

Gemini 3 Pro

The community was eagerly anticipating the release of Gemini 3. Gemini 3 Pro is a state-of-the-art model, with excellent multimodal capabilities and advanced reasoning, and it excels at coding and other agentic activities.

You’ll see below the results on various benchmarks, which are quite impressive and represent a significant leap forward on some of them:

Here are a few pointers with more details:

- A new era of intelligence with Gemini 3, with lots of illustrations of what Gemini 3 can do, and a mention of Gemini 3 Deep Think, which ranks very high on the ARC-AGI-2 and Humanity’s Last Exam benchmarks.

- Start building with Gemini 3, which mentions agentic coding, Antigravity (we’ll come back to it), vibe coding, and visual, video and spatial reasoning.

- Gemini 3 brings upgraded smarts and new capabilities to the Gemini app, which discusses what Gemini 3 brings to the Gemini app, including live generative interfaces (generating live UIs on the fly!).

- All the articles about Gemini 3, which lists all the official blog posts about the launch of Gemini 3, if you want to dive deeper.

Available In Many Places

Gemini 3 Pro is available in other places:

- in Jules the asynchronous coding agent,

- in Firebase AI Logic,

- in Android Studio,

- in JetBrains IDEs in Junie and the AI chat,

- and inside GitHub Copilot.

Available In Gemini CLI

Gemini CLI has also been updated to take advantage of Gemini 3 Pro.

Be sure to read this document, which explains how to access Gemini 3 Pro in Gemini CLI. It’s available to Google AI Ultra users and to paid Gemini and Vertex API key holders, so if you’re not in one of these categories, you might want to join the waitlist to experience all the fun!

Check out this article as well:

- 5 things to try with Gemini 3 Pro in Gemini CLI, on how to set up Gemini 3, turn visual ideas into working apps, generate shell commands from natural language or accurate documentation from your codebase, and get help debugging issues.

Antigravity

Now let’s move on to Antigravity, a new agentic development platform based on VS Code. Of course, you have all the usual functionalities of a code editor, with (smart) code completion and so on. However, the interesting aspect of Antigravity is that the main window is not the IDE itself: the central place where things happen is the agent manager, where you launch your requests. Each request is interpreted and triggers the creation of a plan with various steps. You can review and comment on the plan to adjust it further. Then Gemini 3 handles the implementation of those tasks. Antigravity also produces various other artifacts along the way, like task lists, walkthroughs, screenshots, and even browser recordings.

It’s also possible to use Claude Sonnet and GPT-OSS, so this product is not limited to just Gemini 3, however good it may be.

What I find impressive is the nice integration with the browser, to inspect and see what the implementation looks like, and then loop back to continue improving it, or fixing it if it’s not what the user asked for.

I haven’t covered Nano Banana Pro yet, but with that image generation and editing model integrated in Antigravity, you’re able to create designs, update them visually with manual squiggles and such, and have Antigravity implement that design for you!

Articles to dive deeper:

- Introducing Google Antigravity, a New Era in AI-Assisted Software Development

- Build with Google Antigravity, our new agentic development platform, the blog post announcing Antigravity

- Getting Started, to learn how to get your environment ready and configured.

- Nano Banana Pro in Google Antigravity, which dives into the integration with Nano Banana to generate UI mockups incrementally and get your design implemented.

- Antigravity YouTube Channel, with plenty of short videos, including a longer one to learn the basics, and another short one demonstrating the Nano Banana integration.

- Tutorial: Getting Started with Google Antigravity, by my awesome colleague Romin Irani, who wrote a very detailed step-by-step tutorial to get started easily, and who also created a codelab with precise instructions to go through.

Nano Banana Pro

I wrote about Nano Banana in previous articles showing how to call it from a Javelit frontend, how to create ADK agents with Nano Banana, and simply how to call Nano Banana from Java.

I was already super impressed with its capabilities in terms of image editing. However, if I wanted the best quality, I would usually start with an Imagen generation, then iterate with Nano Banana for editing. But now, Nano Banana Pro is a level above both Imagen 4 Ultra and the original Nano Banana, in terms of prompt adherence, understanding of user intent, creativity, and quality of generation.

When you use it, you’ll notice how great it is at text, even lots of text! It made huge leaps in terms of typography. And what’s crazy is that, because it’s based on Gemini 3 Pro, it’s able to understand articles or videos, and generate detailed and precise infographics about them! It’s connected to Google Search, so it can research, for example, the weather in Paris, and create a diagram with live data! I’ll certainly come back to that topic in forthcoming articles.

For example, here are some infographics that Nano Banana Pro created to summarize this article:

You can mix ingredients together, like different characters (with character consistency), or use them for a kind of style transfer, to follow a certain style.

It has a high level of understanding of scenes and can easily change the lighting or the camera angle.

You can generate images up to 4K! And you have a wide range of aspect ratios to choose from.

Pay attention to the pricing, however, as it’s more expensive than Nano Banana. So for small edits, maybe you’ll stick with Nano Banana, but when you want the most complex designs and the best quality, choose Nano Banana Pro.
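That rule of thumb can be written down as a tiny helper. This is only a sketch of my own reasoning, not an official recommendation: `ImageRequest`, `pick_model`, and the two labels are hypothetical names (they are not real model IDs), and defaulting to the Pro model when nothing is specified is an assumption.

```python
from dataclasses import dataclass

# Hypothetical labels for illustration only; they are NOT official model IDs.
NANO_BANANA = "nano-banana"
NANO_BANANA_PRO = "nano-banana-pro"


@dataclass
class ImageRequest:
    """Describes what an image task needs (all fields are illustrative)."""
    small_edit: bool = False   # a minor touch-up of an existing image
    text_heavy: bool = False   # infographics, posters, lots of typography
    needs_4k: bool = False     # very high resolution output


def pick_model(request: ImageRequest) -> str:
    """Pick the cheaper model for small edits, the Pro model otherwise."""
    # Demanding requirements take priority over cost.
    if request.text_heavy or request.needs_4k:
        return NANO_BANANA_PRO
    # A small edit with no demanding requirement: the cheaper model is enough.
    if request.small_edit:
        return NANO_BANANA
    # Assumption: when in doubt, favor quality.
    return NANO_BANANA_PRO
```

With this sketch, `pick_model(ImageRequest(small_edit=True))` picks the cheaper model, while adding `needs_4k=True` or `text_heavy=True` switches to the Pro one.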

Some links to dive deeper:

- Introducing Nano Banana Pro, the blog post.

- Build with Nano Banana Pro, our Gemini 3 Pro Image model, another official blog post on the launch.

- https://deepmind.google/models/gemini-image/pro/, DeepMind’s product page showing some impressive examples of usage of the model.

- Gemini 3 Pro Image Model Card for all the technical details of the model.

- Testing Gemini 3 Pro Image Model, written by my awesome colleague Laurent Picard, who demonstrates character consistency and the high quality of generated images.

- 7 tips to get the most out of Nano Banana Pro, for practical advice on using the model.

Towards Live And Richer Conversational UIs

I wanted to finish this overview of the announcements of the week with something that you might not have heard of, but which I think is interesting for the future of generative AI and conversational interfaces.

In my talks, at meetups, and in conversations with developers, I often explain that putting chatbots everywhere is not the best way to integrate AI into applications, and that more transparent and seamless generative AI integrations are more likely to succeed with users and customers.

I think the following two projects are helping towards a smoother integration of generative AI:

The GenUI SDK for Flutter allows developers to create dynamic, personalized user interfaces using LLMs, transforming text-based conversations into rich, interactive experiences. It acts as an orchestration layer, sending user prompts and available widgets to an AI agent which generates content and a suitable UI description. The SDK then deserializes and dynamically renders this UI into interactive Flutter widgets, with user interactions triggering subsequent updates. This system relies on the (upcoming) A2UI protocol.
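To make that flow more concrete, here is a minimal Python sketch of the same loop: send a prompt and a widget catalog to an agent, get a UI description back, deserialize it, and render it. It is not the GenUI SDK API (which targets Flutter and Dart): `WIDGET_CATALOG`, `fake_agent`, `deserialize`, and `render` are hypothetical names, the agent is a stub standing in for an LLM call, and the JSON shape is an assumption, not the A2UI format.

```python
import json
from dataclasses import dataclass, field

# Hypothetical catalog: widget types the agent may use, with their allowed keys.
WIDGET_CATALOG = {
    "text": {"value"},
    "button": {"label", "action"},
    "column": {"children"},
}


@dataclass
class Widget:
    type: str
    props: dict = field(default_factory=dict)
    children: list = field(default_factory=list)


def fake_agent(prompt: str, catalog: dict) -> str:
    """Stub standing in for an LLM call; returns a JSON UI description."""
    return json.dumps({
        "type": "column",
        "children": [
            {"type": "text", "value": f"Results for: {prompt}"},
            {"type": "button", "label": "Refine", "action": "refine"},
        ],
    })


def deserialize(node: dict) -> Widget:
    """Turn the UI description into widgets, rejecting anything off-catalog."""
    kind = node["type"]
    if kind not in WIDGET_CATALOG:
        raise ValueError(f"Unknown widget type: {kind}")
    unexpected = set(node) - WIDGET_CATALOG[kind] - {"type"}
    if unexpected:
        raise ValueError(f"Unexpected keys for {kind}: {sorted(unexpected)}")
    children = [deserialize(child) for child in node.get("children", [])]
    props = {k: v for k, v in node.items() if k not in ("type", "children")}
    return Widget(kind, props, children)


def render(widget: Widget) -> str:
    """Render widgets as plain text (a real SDK would build native widgets)."""
    if widget.type == "text":
        return widget.props["value"]
    if widget.type == "button":
        return f"[ {widget.props['label']} ]"
    # "column": stack the children vertically.
    return "\n".join(render(child) for child in widget.children)


def turn(prompt: str) -> str:
    """One round trip: prompt + catalog -> UI description -> rendered UI."""
    description = json.loads(fake_agent(prompt, WIDGET_CATALOG))
    return render(deserialize(description))
```

Calling `turn("flights to Paris")` returns a two-line text UI: the results line followed by a `[ Refine ]` button. A user interaction, such as pressing that button, would simply trigger another `turn` with a follow-up prompt. Validating the description against the catalog is the design choice worth noting: the model only gets to compose widgets the application already knows how to render.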

Generative UI enables AI models to create custom, interactive user experiences, like web pages and tools, directly from any prompt. This dynamic capability is rolling out in the Gemini app and Google Search’s AI Mode, leveraging Gemini 3 Pro with tool access and detailed instructions. Users prefer these outputs over standard AI text, though human-expert designs remain slightly favored. Despite current challenges with speed and occasional inaccuracies, Generative UI signifies a major step toward fully AI-generated and adaptive interfaces.

Now, Your Turn To Have Fun!

With all those announcements, and key pointers to learn more about them, I hope you’re ready to build exciting new things with Gemini 3 Pro, Antigravity, Nano Banana Pro, and more!