Visualize PaLM-based LLM tokens

As I was working on tweaking the Vertex AI text embedding model in LangChain4j, I wanted to better understand how the textembedding-gecko model tokenizes the text, in particular when we implement the Retrieval Augmented Generation approach.

The various PaLM-based models offer a computeTokens endpoint, which returns a list of tokens (encoded in Base 64) and their respective IDs.

At the time of this writing, there’s no equivalent endpoint for Gemini models.
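Since the tokens come back Base64-encoded, they need decoding before they can be read or visualized. Here is a minimal sketch of that step in Java. The class name `TokenDecoder` and the sample values are hypothetical, chosen only for illustration; they are not real output from the endpoint, and calling the endpoint and parsing its response are left out.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;
import java.util.List;

public class TokenDecoder {

    // Decodes one Base64-encoded token into UTF-8 text.
    static String decode(String base64Token) {
        byte[] bytes = Base64.getDecoder().decode(base64Token);
        return new String(bytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // Hypothetical sample values, NOT real output from computeTokens.
        List<String> tokens = List.of("SGVsbG8=", "IHdvcmxk");

        // Brackets make leading and trailing spaces inside a token visible.
        for (String token : tokens) {
            System.out.println("[" + decode(token) + "]");
        }
    }
}
```

Wrapping each decoded token in brackets is a simple way to see where word boundaries and whitespace end up after tokenization.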

Creating kids stories with Generative AI

Last week, I wrote about how to get started with the PaLM API in the Java ecosystem, and particularly, how to overcome the lack of Java client libraries (at least for now) for the PaLM API, and how to properly authenticate. However, what I didn’t explain was what I was building! Let’s fix that today, by telling you a story, a kids’ story! Yes, I was using the trendy Generative AI approach to generate bedtime stories for kids.

Getting started with the PaLM API in the Java ecosystem

Large Language Models (LLMs for short) are taking the world by storm, and things like ChatGPT have become very popular, used by millions of users daily. Google came up with its own chatbot called Bard, which is powered by its ground-breaking PaLM 2 model and API. You can also find and use the PaLM API from within Google Cloud (as part of the Vertex AI Generative AI products) and thus create your own applications based on that API. However, if you look at the documentation, you’ll only find Python tutorials and notebooks, or explanations of how to make cURL calls to the API. But since I’m a Java (and Groovy) developer at heart, I was interested in seeing how to do this from the Java world.

Build and deploy Java 17 apps on Cloud Run with Cloud Native Buildpacks on Temurin

In this article, let’s revisit the topic of deploying Java apps on Cloud Run. In particular, I’ll deploy a Micronaut app, written with Java 17, and built with Gradle.

With a custom Dockerfile

On Cloud Run, you deploy containerised applications, so you have to decide the way you want to build a container for your application. In a previous article, I showed an example of using your own Dockerfile, which would look as follows with an OpenJDK 17, and enabling preview features of the language:

A Cloud Run service in Go calling a Workflows callback endpoint

It’s all Richard Seroter’s fault: I ended up dabbling with Golang! We were chatting about a use case using Google Cloud Workflows and a Cloud Run service implemented in Go. So it was the occasion to play a bit with Go. Well, I still don’t like error handling… But let’s rewind the story a bit!

Workflows is a fully-managed service/API orchestrator on Google Cloud. You can create some advanced business workflows using YAML syntax. I’ve built numerous little projects using it, and blogged about it. I particularly like its ability to pause a workflow execution, creating a callback endpoint that you can call from an external system to resume the execution of the workflow. With callbacks, you’re able to implement human validation steps, for example in an expense report application where a manager validates or rejects an expense from someone in their team (this is what I implemented in this article).

Skyrocketing Micronaut microservices into Google Cloud

Instead of spending too much time on infrastructure, take advantage of readily available serverless solutions. Focus on your Micronaut code, and deploy it rapidly as a function, an application, or within a container, on Google Cloud Platform, with Cloud Functions, App Engine, or Cloud Run.

In this presentation, you’ll discover the options you have to deploy your Micronaut applications and services on Google Cloud. With Micronaut Launch, it’s easy to get started with a template project, and with a few tweaks, you can then push your code to production.

Running Micronaut serverlessly on Google Cloud Platform

Last week, I had the pleasure of presenting Micronaut in action on Google Cloud Platform, via a webinar organized by OCI. Particularly, I focused on the serverless compute options available: Cloud Functions, App Engine, and Cloud Run.

Here are the slides I presented. However, the real meat is in the demos which are not displayed on this deck! So let’s have a closer look at them, until the video is published online.

On Google Cloud Platform, you have three solutions when you want to deploy your code in a serverless fashion (i.e. hassle-free infrastructure, automatic scaling, pay-as-you-go):

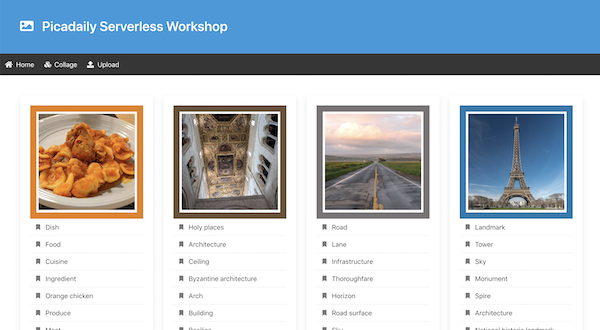

Video: the Pic-a-Daily serverless workshop

My partner in crime, Mete Atamel, and I ran two editions of our “Pic-a-Daily” serverless workshop. It’s an online, hands-on workshop, where developers get their hands on the serverless products provided by Google Cloud Platform:

- Cloud Functions — to develop and run functions, small units of logic glue, to react to events of your cloud projects and services

- App Engine — to deploy web apps, for web frontends, or API backends

- Cloud Run — to deploy and scale containerised services

Start the fun with Java 14 and Micronaut inside serverless containers on Cloud Run

Hot on the heels of the announcement of the general availability of JDK 14, I couldn’t resist taking it for a spin. Without messing up my environment — I’ll confess I’m running 11 on my machine, but I’m still not even using everything that came past Java 8! — I decided to test this new edition within the comfy setting of a Docker container.

Minimal OpenJDK 14 image running JShell

It’s super easy to get started (assuming you have Docker installed on your machine): create a Dockerfile with the following content:

Serverless tip #5 — How to invoke a secured Cloud Run service locally

Requirements:

- an existing Google Cloud Platform account with a project

- you have enabled the Cloud Run service and already deployed a container image

- your local environment’s gcloud is already configured to point at your GCP project

By default, a Cloud Run service is secured when you deploy it, unless you use the --allow-unauthenticated flag with the gcloud command-line (or tick the appropriate checkbox on the Google Cloud Console).

But once deployed, if you want to call it locally from your development machine, for testing purposes, you’ll have to be authenticated.
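One possible way to do that from Java is to ask the local gcloud CLI for an identity token and pass it as a bearer token in the Authorization header. This is a minimal sketch, assuming gcloud is on the PATH and logged in with an account allowed to invoke the service. The class name `SecuredCloudRunCall` and its helper methods are hypothetical, and the service URL must be supplied as the first argument.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

public class SecuredCloudRunCall {

    // Asks the local gcloud CLI for an identity token for the active account.
    static String identityToken() throws IOException, InterruptedException {
        Process process = new ProcessBuilder("gcloud", "auth", "print-identity-token")
                .redirectError(ProcessBuilder.Redirect.INHERIT)
                .start();
        String token = new String(
                process.getInputStream().readAllBytes(), StandardCharsets.UTF_8).trim();
        if (process.waitFor() != 0) {
            throw new IOException("gcloud exited with an error");
        }
        return token;
    }

    // Builds a GET request carrying the token as a bearer credential.
    static HttpRequest authenticatedGet(String serviceUrl, String token) {
        return HttpRequest.newBuilder(URI.create(serviceUrl))
                .header("Authorization", "Bearer " + token)
                .GET()
                .build();
    }

    public static void main(String[] args) throws Exception {
        if (args.length == 0) {
            System.err.println("Usage: java SecuredCloudRunCall.java <service-url>");
            return;
        }
        HttpRequest request = authenticatedGet(args[0], identityToken());
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode());
        System.out.println(response.body());
    }
}
```

Shelling out to gcloud keeps the sketch free of extra dependencies, which suits quick local testing; code running on Google Cloud would normally obtain its credentials differently.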
